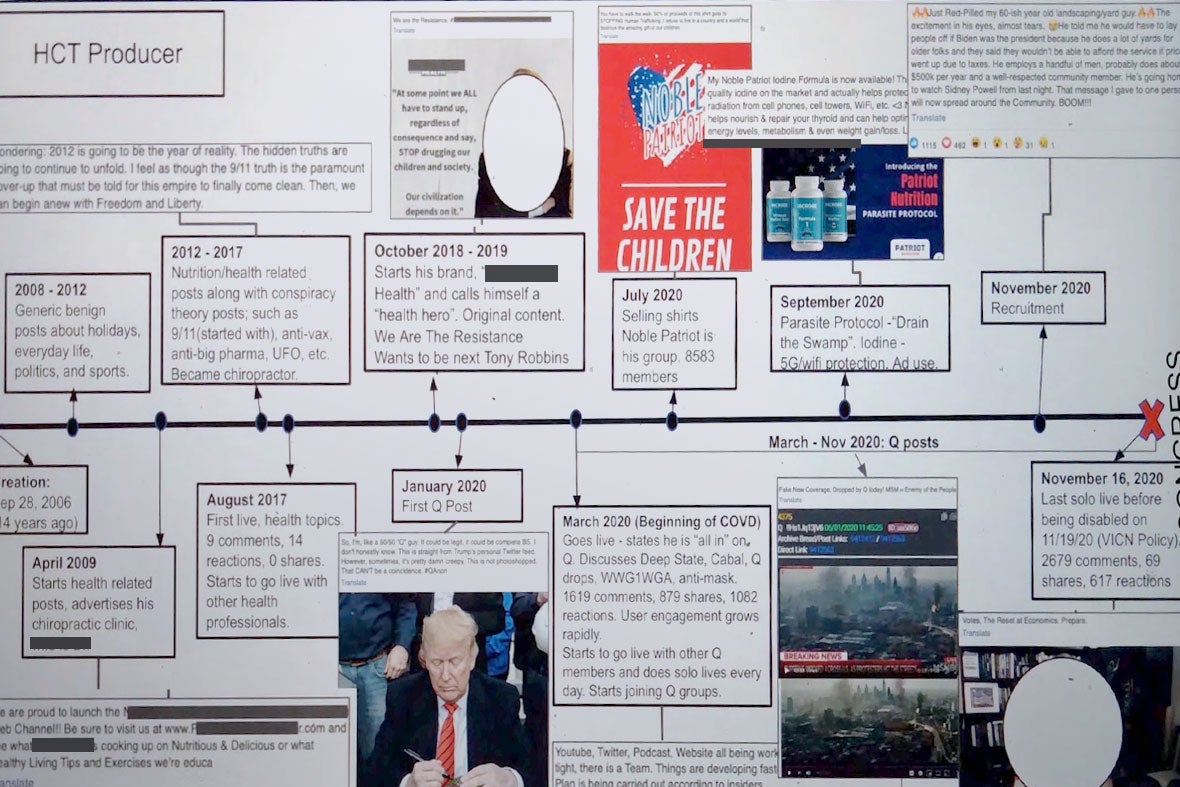

On Sept. 28, 2006, a young chiropractor, then about 30 years old, signed up for Facebook, where he posted about holidays and sports while promoting his private clinic. Fourteen years later, on Nov. 19, 2020, he was kicked off the platform after livestreaming U.S. election and QAnon conspiracies to his nearly 40,000 followers.

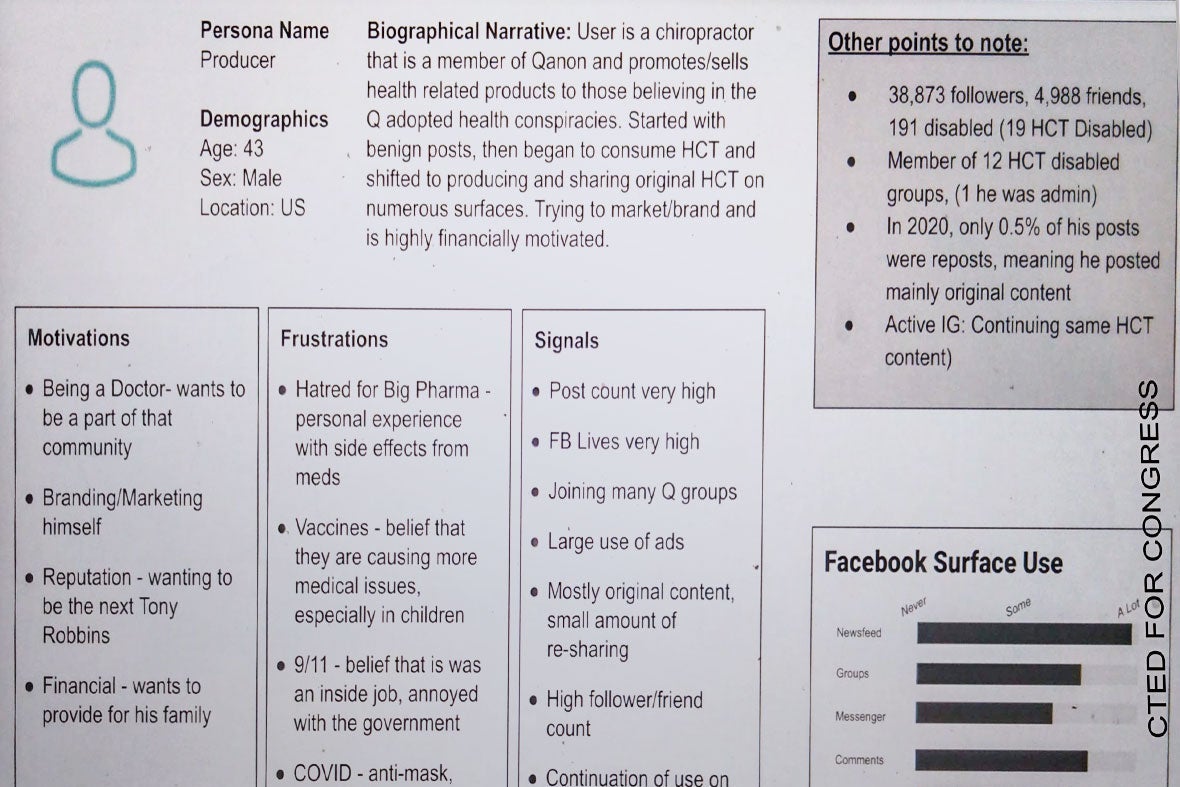

Facebook employees thought this anonymous user’s trajectory was worth analyzing. Recent disclosures made to the Securities and Exchange Commission and provided to Congress in redacted form by whistleblower Frances Haugen’s legal counsel show that Facebook’s Central Integrity team assembled detailed logs of two popular Facebook users’ activity over 14 years, as part of a study on misinformation across the platform. What made these two users notable was their transformation over time from early Facebook adopters to full-blown conspiracy theorists. The redacted versions received by Congress were reviewed by a consortium of news organizations, including Slate.

“User journey maps” of the chiropractor and one other person, a Southern home decorator with a religious bent, were part of an internal presentation titled “Harmful Conspiracy Theories: Definitions, Features, Personas,” which is undated but includes events from 2021. These maps show, through a timeline of key Facebook output, not only how the two users journeyed deep into fringe conspiracy theories but also how they themselves became massive vectors of misinformation, mastering Facebook’s architecture in order to distribute lies at scale.

For his first six years on Facebook, the chiropractor’s activity appeared relatively harmless. Things began to swing in 2012, when he posted a status nodding to 9/11 conspiracies. Over the next five years, as the Integrity team’s study detailed, his once-anodyne “nutrition/health related posts” would come alongside screeds that were “anti-vax” and “anti-big pharma” and even discussed UFOs. In August 2017, the chiropractor began livestreaming his thoughts on these subjects, sometimes with “other health professionals” as guests. In 2018, he began invoking the supposed mass drugging of American children, a conspiracy theory QAnon believers have echoed. Once the pandemic began, in addition to spreading QAnon content, he shared COVID lies in his livestreams as well as through an 8,500-member Facebook group and an ad account, both of which he created. By this time, the chiropractor was also selling T-shirts with the phrase #SaveTheChildren, which is popular with QAnon believers.

The chiropractor’s following offered him a larger platform for the distribution of junk medicine he concocted, which he marketed using the language of his new friends: mentioning his daughter when discussing the alleged failures of modern medicine, using the symbols of the U.S. flag and patriotism in ads for his “treatments,” and introducing a “parasite” treatment with the phrase “Drain the body’s swamp!” By November 2020, the chiropractor was a hard-line election denier, posting about “red-pilling” his 60-year-old landscaper and directing him to listen to Sidney Powell, the lawyer involved in efforts to overturn the election on behalf of Donald Trump. On Nov. 16, he did a livestream, which garnered about a million views, to prepare his followers for the possibility that the “deep state” would “steal” the presidency from Trump. Three days later, he was permanently banned from Facebook.

The other user had a similar trajectory. She created her Facebook account in November 2007, when she was 53 years old, and spent the next eight years writing “Benign posts mostly of family, friends & religion,” as Facebook researchers described it. (One such post read, in part, “I have a burden for all to know sweet Jesus.”) By the end of 2015, she had launched a home décor business and had also started writing a “few anti-left and anti-Islamic posts,” according to the Integrity team. From 2016 onward, she was resharing YouTube links about the “end times” and aligning herself with the alt-right. July 2019 was her tipping point toward full conspiracist: She began searching for “red pill” groups on the site and posting statuses that asked her followers whether they thought the moon landing was real and whether they were “fellow ‘Q’ Followers.” Starting in March 2020, she was using her home décor business to promote QAnon theories and invite her friends to meetups at her business, while also creating two Q-themed FB groups. Each one amassed thousands of members; the home decorator messaged hundreds of users she didn’t know in order to recruit them to the cause, while also giving her group members tips on how to convert their family and friends. By October 2020, Facebook had banned her account.

The content from each user falls in a category the Facebook researchers labeled “harmful conspiracy theories,” or HCTs. These are extremist beliefs that can lead to real-world damage: Anti-vaccine sentiments, for instance, can lead to resurgences of deadly diseases, and the expansive pro-Trump QAnon conspiracy theory has led to physical violence. Facebook’s presentation exhibits anonymized personal statuses, group posts, graphics, sock puppet accounts, engagement metrics, and the behavioral patterns of members of conspiracy networks. It also recommends actions for curbing the reach of such users on Facebook, including targeting and halting the spread of HCT or HCT-adjacent posts early on; instituting strikes-before-removal systems for HCT Facebook groups and users who write original HCT content; reducing the visibility of such groups and the people who create them; and limiting the number of friend requests, group invites, and messages that harmful conspiracists can send to other users at one time.

The slideshow is revealing in another way: It’s yet another glimpse at just how much data Facebook has on its users. The trove of info Facebook’s Integrity team collected and presented on the chiropractor, in particular, is staggering: Researchers highlighted his statuses, ads, and groups as well as private posts within these groups—along with engagement metrics for all these—across a 14-year span. They were also able to track how much of his content consisted of reposts, how many groups he was a part of (including those that were eventually disabled), and the number of banned and active followers he had. They could track the creation dates and post counts of the chiropractor’s self-administered groups and ad networks, including the amount of money splurged on certain advertisements. And they could nail down the turning points in his trajectory from everyday user to active conspiracist, as well as the moments he began branching into different media forms. These points include his first health-related livestream in August 2017, the creation of his “health” brand in October 2018 for selling his alternative homemade medicines, his statement that he was “all in” on Q in March 2020, and his creation of same-name Facebook groups and ad accounts used to promote his quack science by that summer. A lot of this info was technically public, but it would be hard for even seasoned third-party researchers to assemble so full a portrait.

Using this data, Integrity labeled this man an “HCT Producer,” meaning someone with a high follower count who distributes posts filled with self-authored disinformation at a rapid pace, oversees multiple groups that inculcate these ideas, and claims to have secret knowledge that the general population doesn’t. The presentation recommended that Facebook take action on HCT producers by imposing a strike-to-suspension policy, barring such users from creating multiple online identities or streaming during a suspension (and taking down alternate accounts when necessary), and monitoring their ad accounts more closely, if applicable. These recommendations were meant to work in concert with the broader strategy, described above, for halting the spread of harmful conspiracies. When I asked Facebook whether it had implemented any of the specific policy recommendations outlined in the slides, Meta spokesperson Joe Osborne provided this statement:

We invest in research to help us discover gaps in our systems and identify problems to fix. Our policies against violence-inducing conspiracy networks such as QAnon are informed by the research we do and are designed to target how adherents to the conspiracy network can organize on the platform. We remove Pages, Groups, Instagram accounts that represent organisations like this and also prohibit ads that praise, support or represent QAnon from running on our platform. Since August 2020 we’ve removed about 3,900 Pages, 11,300 Groups, 640 events, 50,300 Facebook profiles and 32,500 Instagram accounts for violating our policy against QAnon. We also provide people who search for terms related to QAnon with credible resources and information.

Facebook, obviously, isn’t solely responsible for the kind of ugliness that the chiropractor and the home decorator spread on the platform. But it is clear from the presentation that the company is aware that as such users have become more extreme, they have built larger audiences, in more mediums and formats, and used those tools with increasing sophistication both to earn money and spread hateful ideas and disinformation. And in these cases, they only earned bans when Facebook was under heavy scrutiny for its handling of fringe actors attempting to spread lies on the eve of a presidential election.

What should Facebook do about harmful conspiracy theories? The researchers’ recommendations for stemming this problem focus on closing the “gaps HCT actors exploit,” such as the ability to create sock puppet accounts and backups in case of deletion and the ability to mass-message random users and invite them to HCT-themed groups. The authors pushed to “dedicate additional policy resources on conspiracies harmful enough to warrant our strongest enforcements.” At the same time, they allowed that the harms HCTs can inflict—alongside “real world acts of physical violence”—include “excessive expenditure of FB resources” and “undue reputational risk.” At this point, it’s hard to consider such things harms against Facebook, which allows figures like the chiropractor to thrive in the first place. “Self-harms” might be more apt.

Future Tense is a partnership of Slate, New America, and Arizona State University that examines emerging technologies, public policy, and society.